Epistemic Security briefing: Addressing platform design to mitigate online harms

Demos’ response to the Science, Innovation and Technology Committee’s report on social media, misinformation and harmful algorithms

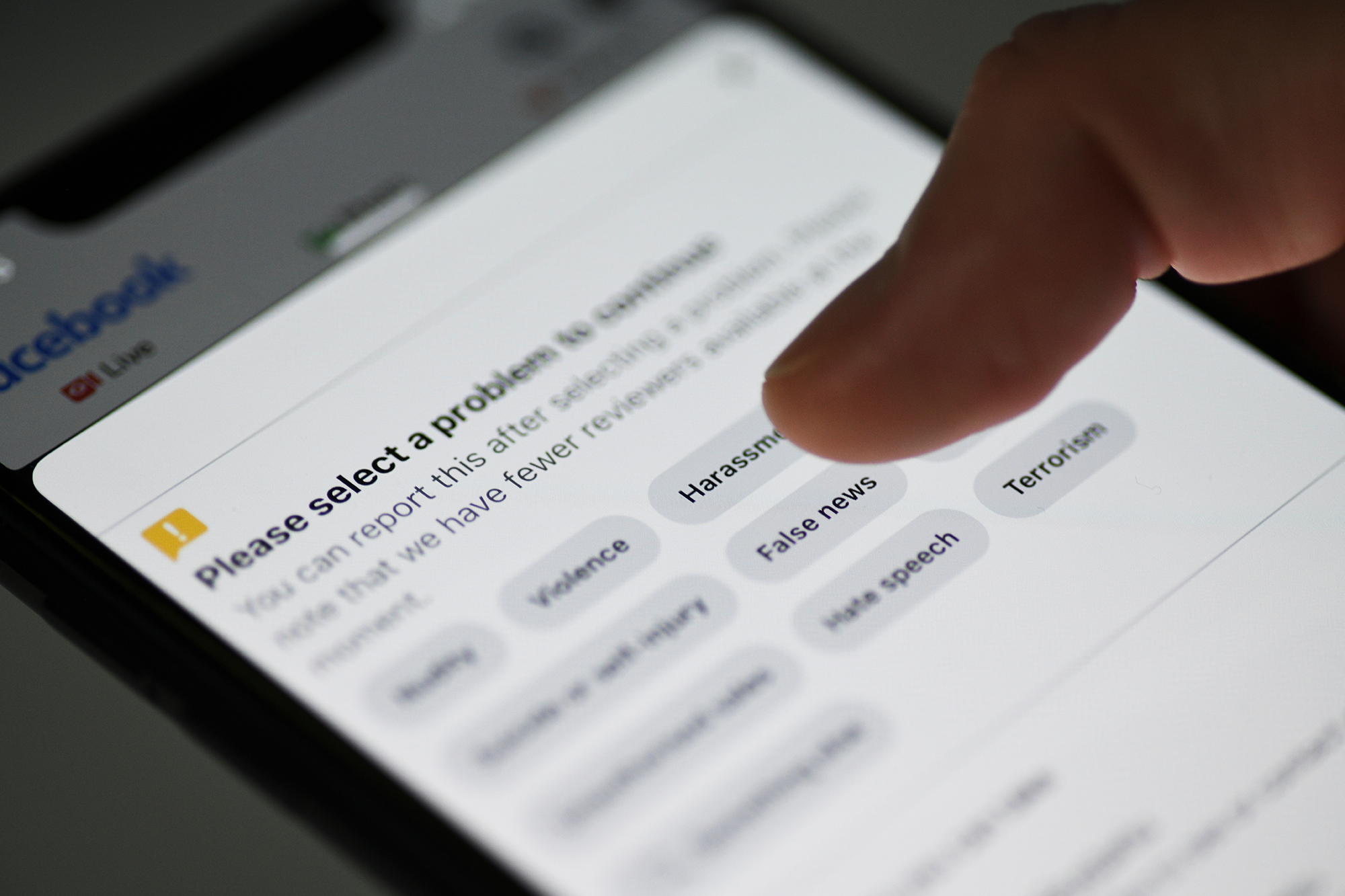

The Science, Innovation and Technology (SIT) Committee has published the report of its inquiry into Social media, misinformation and harmful algorithms. We welcome the report: it is a thorough examination of how platforms amplify unreliable and hateful content, spurring offline violence such as 2024’s Southport riots. These risks to the UK’s information supply chains pose a fundamental challenge to our epistemic security and democracy. This briefing responds to the inquiry by examining four of its cross-cutting recommendations:

- Require platforms to conduct and act on risk assessments

The Committee recommends that the government require digital platforms to conduct and act on risk assessments for ‘legal but harmful’ content. This would significantly expand the Online Safety Act’s existing system of risk assessments, which currently covers illegal harms. While we support the aim behind these proposals, we also highlight issues with the SIT report’s approach that need clarification.

- Establish the ‘right to reset’ and other user controls

The SIT report calls for users to be given more control over how platforms operate. This includes a proposal to require platforms to give users the ‘right to reset’ their recommendation algorithms. The proposal is an innovative way to provide users with more agency, and a reset option is already available on platforms like TikTok and Instagram. However, any right to reset needs to come with strong requirements to make sure it is easy to use and understand. We also suggest exploring additional user controls, such as a ‘right to decide’ between content recommendation algorithms.

- Provide researchers with better access to platforms’ data

The Committee finds that independent researchers lack access to high-quality data on platforms’ inner workings, which makes it difficult to conduct online safety research. It calls for social media and generative AI platforms to be required to provide government-commissioned online safety researchers with “full” access to their internal data. Although we agree with the Committee that researchers must be given greater data access, this access should not be restricted to researchers commissioned by the government. Building on recent Ofcom proposals, which are based on measures in the Data Use and Access Act, we have called on the government to mandate all platforms to make their data available to all vetted public interest researchers, including civil society organisations, which play a crucial role in identifying harmful content.

- Fund and conduct more research

The Committee highlights gaps in the knowledge base on how recommendation algorithms work and on the effectiveness of different content moderation systems. It suggests the government should commission independent researchers to examine both issues. We agree with the aim behind these recommendations and have previously called for more research funding. Nevertheless, the research’s independence could be undermined if the funding is granted directly by the UK government. To avoid this, the funding should be granted by bodies that already fund or publish independent research, such as UKRI or Ofcom.

The government and regulators should take heed of the Committee’s findings, as well as the calls for action made by Demos and other civil society organisations. We look forward to September 11th, when we expect the UK government’s response to the inquiry.